Classroom Internet of Things

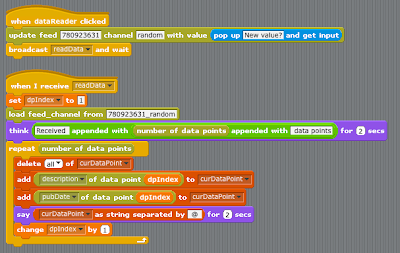

Fragment of Sense script that updates and reads a Xively feed

Recently I have been working on getting the Sense platform to work with Xively, a cloud service for sharing data from Internet of Things (IoT) devices. This has been in the context of a project funded by the UK's Technology Strategy Board, called DISTANCE: Demonstrating the Internet of School Things – A National Collaborative Experience. The project will use a range of IoT devices, from scientific data loggers, air quality monitors and weather stations to the OU's Sense board, to demonstrate how IoT technologies can enhance learning. Working with schools across the UK, we are installing these devices in classrooms and playgrounds (really, wherever the students and teachers want) and making the data streams they generate available as Xively feeds. This allows the schools to use each other's data, so that students can carry out experiments using real data gathered from sites a...